PROBLEM LINK:

Practice

Contest: Division 1

Contest: Division 2

Contest: Division 3

Contest: Division 4

Author:

Tester: sushil2006

Editorialist: iceknight1093

DIFFICULTY:

Simple

PREREQUISITES:

Harmonic series lemma

PROBLEM:

For a sequence B of length M, define f(B) := M + \gcd(B_1, B_2, \ldots, B_M).

You’re given an array A. Find the maximum value of f(B) across all subsequences of B.

EXPLANATION:

The expression we wish to maximize is the length plus the GCD of the subsequence.

Suppose we fix g to be the GCD of the subsequence.

Then, every element of the chosen subsequence must be a multiple of g.

Clearly, it’s best to just take every element that’s a multiple of g into the subsequence, in an attempt to maximize its length: if the GCD does equal g, great — otherwise the GCD will be a multiple of g, giving us an ever larger value!

Of course, the subsequence must be non-empty for this to be valid.

We now already have a (very slow) algorithm to compute the answer: fix a value g that’s a factor of at least one element of A, then maximize the answer with g plus the number of multiples of g.

However, checking all g is definitely impossible so we need to do better.

Let \text{mx} = \max(A).

Observe that choosing g = \text{mx} already gives an answer of at least \text{mx} + 1.

This means g \lt \text{mx} + 1 - N can never be optimal: at best we find a subsequence of length N, but even then the sum isn’t exceeding \text{mx}+1.

This means we really only have N possible candidates for g, that being each g \in [\text{mx}-N+1, \text{mx}].

For each g among these, we’d like to know how many elements of A are divisible by it.

To compute this quickly, rather than iterate through every element of the array, we use an idea similar to the sieve of Eratosthenes:

- Let \text{freq}[x] denote the number of occurrences of x in A.

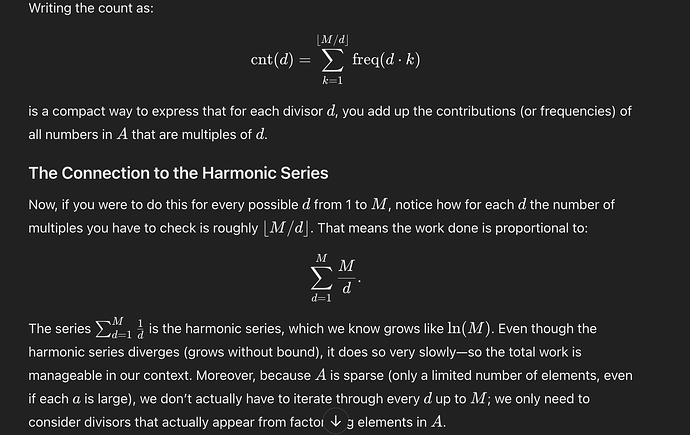

- Then, the number of multiples of g in A is given by \text{freq}[g] + \text{freq}[2g] + \text{freq}[3g] + \ldots, where the summation is across all k\cdot g \leq \text{mx}.

The time complexity of this approach can be analyzed in similar fashion to the sieve of Eratosthenes: with g = \text{mx - N + i}, we’ll check at most \left\lfloor \frac{N}{i} \right\rfloor multiples of g, so the number of times we need to look up \text{freq} is bounded by

where the logarithmic bound comes from the harmonic series.

The true complexity is \mathcal{O}(N\log N) multiplied by the complexity of looking up \text{freq}, which is:

- \mathcal{O}(\log N) if you use a map (

std::mapin C++,TreeMapin Java), or a binary search to count. - Expected \mathcal{O}(1) time if you use a hashmap (

std::unordered_mapor a variant in C++,HashMapin Java,dictorcollections.Counterin Python). - True \mathcal{O}(1) time if you use an array.

For this, note that only frequencies of elements within N of \text{mx} matter, so we can pretend these elements are shifted to [0, N] instead and use a normal frequency array.

All three methods should work, though if you use the first and have an extra log it might be close depending on implementation.

TIME COMPLEXITY:

\mathcal{O}(N\log N) per testcase.

CODE:

Editorialist's code (PyPy3)

for _ in range(int(input())):

n = int(input())

a = list(map(int, input().split()))

mx = max(a)

freq = [0]*(n+1)

for x in a:

if mx-x <= n: freq[mx-x] += 1

ans = mx + freq[0]

for i in range(n):

x = mx - i

if x <= 0: break

ct = 0

for y in range(x, mx+1, x):

ct += freq[mx-y]

ans = max(ans, x + ct)

print(ans)